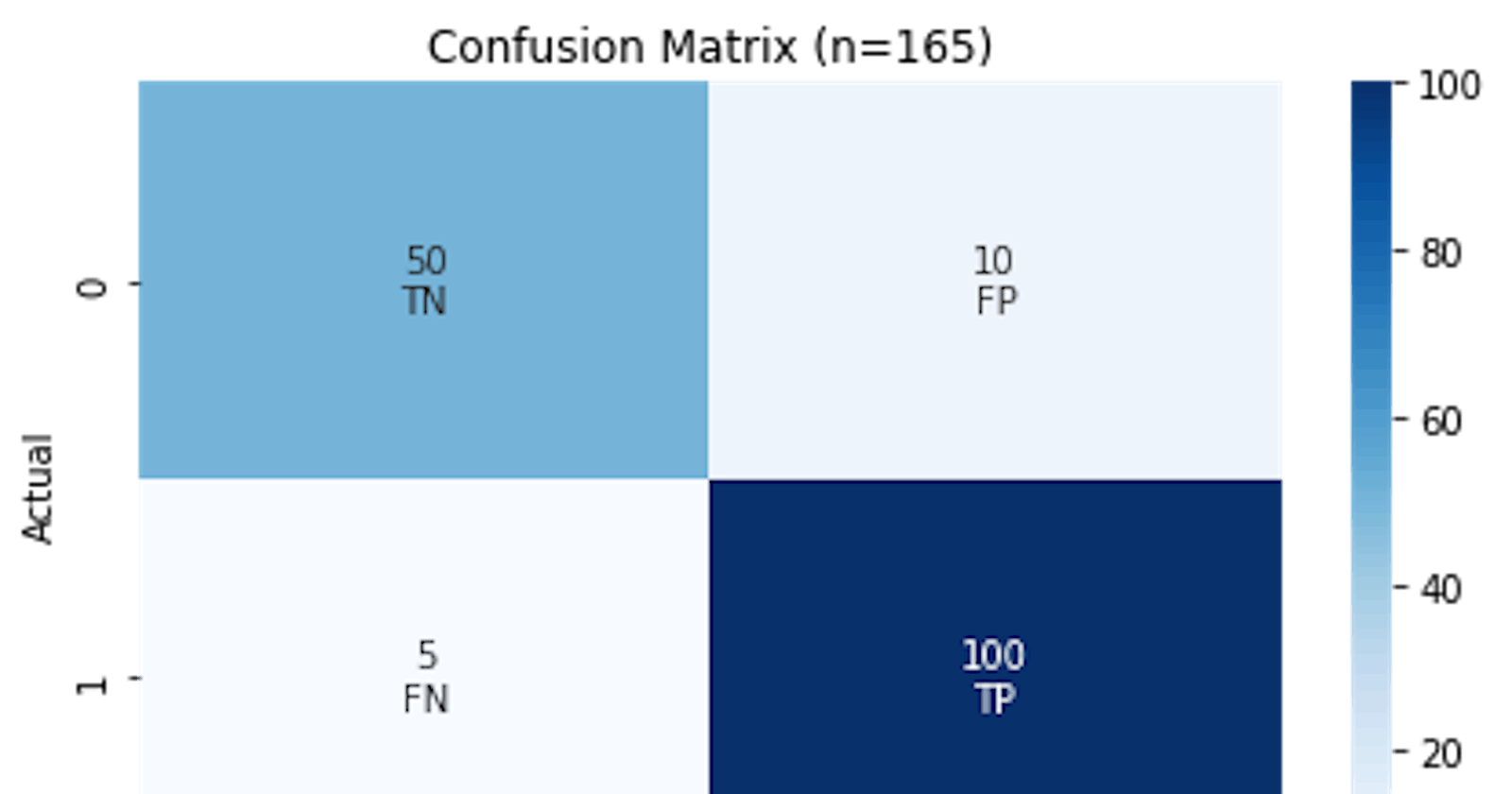

A confusion matrix is a tool for measuring the performance of a classification model. It is a table with the following layout:

|             | Predicted: Yes | Predicted: No |
|-------------|----------------|---------------|
| Actual: Yes | TP             | FN            |
| Actual: No  | FP             | TN            |

The matrix has two dimensions (predicted and actual) and four categories:

True Positives (TP): The model predicted the positive class, and the actual target value is also positive.

True Negatives (TN): The model predicted the negative class, and the actual value is also negative.

False Positives (FP): The model predicted 'yes', but the truth is 'no'.

False Negatives (FN): The model predicted 'no', but the truth is 'yes'.
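To make the four categories concrete, here is a minimal Python sketch that counts them from two lists of labels. It assumes binary labels encoded as 1 (positive, 'yes') and 0 (negative, 'no'); the function name `confusion_counts` and the sample lists are hypothetical, for illustration only.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn), assuming binary labels: 1 = positive, 0 = negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1  # predicted 'yes', truth is 'yes'
        elif predicted == 0 and actual == 0:
            tn += 1  # predicted 'no', truth is 'no'
        elif predicted == 1 and actual == 0:
            fp += 1  # predicted 'yes', truth is 'no'
        else:
            fn += 1  # predicted 'no', truth is 'yes'
    return tp, tn, fp, fn


# Hypothetical labels: 1 = disease present, 0 = disease absent
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(confusion_counts(y_true, y_pred))  # (3, 3, 1, 1)
```

Every (actual, predicted) pair falls into exactly one of the four cells, so the four counts always add up to the number of observations.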

In this example, 'positive' refers to the presence of a disease and 'negative' refers to its absence. Based on the example matrix above:

TP = 100: The model correctly predicted 100 positive cases.

TN = 50: The model correctly predicted 50 negative cases.

FP = 10: The model incorrectly predicted 10 cases as positive (they were actually negative).

FN = 5: The model incorrectly predicted 5 cases as negative (they were actually positive).
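The example counts can also be arranged in the table layout shown earlier (rows are the actual classes, columns are the predicted classes). The row and column totals are exactly the denominators that the metrics below divide by. A minimal sketch; the variable names are hypothetical:

```python
# Example counts from the text
TP, TN, FP, FN = 100, 50, 10, 5

# Rows: actual Yes / actual No; columns: predicted Yes / predicted No
matrix = [
    [TP, FN],  # Actual: Yes
    [FP, TN],  # Actual: No
]

actual_positives = sum(matrix[0])                  # TP + FN
actual_negatives = sum(matrix[1])                  # FP + TN
predicted_positives = matrix[0][0] + matrix[1][0]  # TP + FP
predicted_negatives = matrix[0][1] + matrix[1][1]  # FN + TN
total = actual_positives + actual_negatives

print(actual_positives, actual_negatives)        # 105 60
print(predicted_positives, predicted_negatives)  # 110 55
print(total)                                     # 165
```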

Several performance metrics, such as accuracy, can be derived from this matrix:

Accuracy: The proportion of correct classifications out of all classifications made. It measures how often the classifier makes the correct prediction. It's the most intuitive performance measure.

Accuracy = (True Positives + True Negatives) / Total Observations

Recall (Sensitivity, True Positive Rate): The proportion of actual positive cases that the model correctly predicted as positive. High recall indicates that the model is good at identifying positive cases.

Recall = True Positives / (True Positives + False Negatives)

Fall-out (False Positive Rate, also called the false alarm rate): The proportion of actual negative cases that the model incorrectly predicted as positive. It becomes crucial when the cost of a false positive is high, e.g., a legitimate email flagged as spam in spam detection.

Fall-out = False Positives / (False Positives + True Negatives)

Specificity (True Negative Rate): The counterpart of recall for the negative class, this is the proportion of actual negative cases that the model correctly predicted as negative. It equals 1 − fall-out. High specificity means the model is good at identifying negative cases.

Specificity = True Negatives / (True Negatives + False Positives)

Precision: The proportion of correct positive predictions out of all positive predictions. High precision means that the model's positive predictions are reliable.

Precision = True Positives / (True Positives + False Positives)

F1 Score: The F1 score is the harmonic mean of precision and recall, so it balances the two. A high F1 score means the model achieves both good precision and good recall. It is useful with imbalanced classes because it takes both false positives and false negatives into account.

F1 Score = 2 * ((Precision * Recall) / (Precision + Recall))

ROC Curve and AUC:

An ROC curve (Receiver Operating Characteristic curve) and AUC score (Area Under the ROC Curve) provide a visual and quantitative measure of a binary classifier's performance across all possible classification thresholds.

Image source: Google's Machine Learning Crash Course - Classification: ROC and AUC

The ROC curve plots the TPR (True Positive Rate, i.e., sensitivity) against the FPR (False Positive Rate, i.e., 1 − specificity) at different classification thresholds, which shows us the relationship between sensitivity and specificity.

This allows us to inspect and select the threshold that gives a better trade-off between sensitivity and specificity.

In the ideal situation, where the classifier scores every positive instance above every negative one, the curve rises straight up the y-axis from (0, 0) to (0, 1) and then runs horizontally along TPR = 1 to (1, 1).

An AUC of 1.0 means that the model ranks every positive instance above every negative one, so some threshold classifies all instances correctly. An AUC of 0.5 means that the model performs no better than random guessing and cannot distinguish between the classes.
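As a rough illustration of how an ROC curve is built, the sketch below sweeps a threshold over a model's predicted scores, records (FPR, TPR) at each threshold, and estimates the AUC with the trapezoidal rule. It assumes the model outputs one score per instance, where a higher score means "more likely positive", and that the labels contain at least one positive and one negative. The function names and scores are hypothetical; for real work, scikit-learn's `roc_curve` and `roc_auc_score` are tested implementations.

```python
def roc_points(y_true, scores):
    """Return (fpr, tpr) points for every distinct threshold, strictest first.

    An instance is predicted positive when its score >= threshold.
    Assumes y_true holds at least one 1 and at least one 0.
    """
    positives = sum(1 for y in y_true if y == 1)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))  # (FPR, TPR)
    return points  # the lowest threshold predicts everything positive: (1, 1)


def auc_trapezoid(points):
    """Area under the piecewise-linear curve through the (fpr, tpr) points."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical scores for six instances
print(round(auc_trapezoid(roc_points([1, 1, 0, 1, 0, 0], [0.9, 0.8, 0.7, 0.6, 0.3, 0.2])), 4))  # 0.8889
# Perfect separation: every positive scores above every negative
print(auc_trapezoid(roc_points([1, 1, 0, 0], [0.9, 0.8, 0.2, 0.1])))  # 1.0
# No separation: identical scores for all instances
print(auc_trapezoid(roc_points([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5])))  # 0.5
```

The perfectly separated case traces the top-left corner of the plot, while identical scores collapse the curve to the diagonal from (0, 0) to (1, 1).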

For the example values TP = 100, TN = 50, FP = 10, and FN = 5, these metrics (except AUC) are:

accuracy = (TP + TN) / (TP + TN + FP + FN) = 150/165 = 0.9090909090909091

recall = TP / (TP + FN) = 100/105 = 0.9523809523809523

fall_out = FP / (FP + TN) = 10/60 = 0.16666666666666666

specificity = TN / (TN + FP) = 50/60 = 0.8333333333333334

precision = TP / (TP + FP) = 100/110 = 0.9090909090909091

f1_score = 2 * ((precision * recall) / (precision + recall)) = 0.9302325581395349
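The arithmetic above can be reproduced with a short Python function. This is a minimal sketch: the function name `classification_metrics` is hypothetical, and it assumes none of the denominators is zero (for example, a model that never predicts 'yes' would leave precision undefined).

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute the confusion-matrix metrics from raw counts (all denominators assumed non-zero)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "recall": recall,
        "fall_out": fp / (fp + tn),
        "specificity": tn / (tn + fp),
        "precision": precision,
        # Same form as the formula in the text: 2 * ((P * R) / (P + R))
        "f1_score": 2 * ((precision * recall) / (precision + recall)),
    }


for name, value in classification_metrics(tp=100, tn=50, fp=10, fn=5).items():
    print(f"{name} = {value}")
```

Up to floating-point rounding in the last digit, the printed values should match the ones listed above; note also that specificity and fall-out always sum to 1.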